The digital age has revolutionized how we access and consume information, offering unparalleled convenience and breadth. However, this abundance of data also presents significant challenges in verifying the reliability and authenticity of online content. South Korea’s leading search engine, Naver, has come under scrutiny for the declining trustworthiness of its search results. Users increasingly encounter content saturated with advertisements and biased reviews, making it difficult to discern genuine information. Additionally, the rise of AI-generated content has further muddled the waters, raising concerns about misinformation and the integrity of online information.

Naver’s ranking algorithms, such as Deep Intent Analysis (DIA) and Creator Rank (C-Rank), prioritize user-preferred and fresh content, but this has inadvertently created an environment where advertisements and sponsored content often overshadow genuine user reviews. Compounding the problem is the growing prevalence of AI-generated content, which, while efficient to produce, can propagate misinformation and degrade the overall quality of information available online. In the face of these challenges, it becomes crucial to develop strategies for identifying and promoting reliable information.

The Decline of Reliable Information on Naver

Naver, South Korea’s dominant search engine, has undergone several algorithmic changes aimed at improving user experience and content relevance. Initially, Naver relied on basic ranking algorithms, but over time, it introduced more sophisticated systems such as the Deep Intent Analysis (DIA) and Creator Rank (C-Rank) algorithms. The DIA algorithm focuses on understanding user intent and preferences, promoting content that aligns with these factors. The C-Rank algorithm, on the other hand, evaluates content based on its creator’s credibility and the engagement it receives, such as likes, shares, and comments.

While these algorithms aim to surface high-quality and relevant content, they have also led to unintended consequences. The emphasis on user preferences and engagement metrics often results in the promotion of content that is popular but not necessarily accurate or unbiased. Because likes, shares, and comments accumulate over time, newer and potentially more informative content struggles to gain visibility against well-established but possibly outdated posts.
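
The gap between popularity and accuracy can be illustrated with a toy ranking. The sketch below is not Naver’s DIA or C-Rank implementation, whose internals are not described here; the `Post` fields, the sample posts, and the `accuracy` rating are all hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    engagement: int   # hypothetical total of likes, shares, and comments
    accuracy: float   # hypothetical 0.0-1.0 rating from an independent review


def rank_by_engagement(posts):
    """Order posts by raw engagement only, highest first."""
    return sorted(posts, key=lambda p: p.engagement, reverse=True)


def rank_with_accuracy(posts):
    """Order posts by engagement scaled by the accuracy rating, highest first."""
    return sorted(posts, key=lambda p: p.engagement * p.accuracy, reverse=True)


# Made-up sample data, not measurements from any real platform.
POSTS = [
    Post("Sponsored review", engagement=900, accuracy=0.3),
    Post("Independent test", engagement=400, accuracy=0.9),
    Post("Old popular post", engagement=700, accuracy=0.4),
]

if __name__ == "__main__":
    print([p.title for p in rank_by_engagement(POSTS)])
    print([p.title for p in rank_with_accuracy(POSTS)])
```

With this made-up data, the sponsored review tops the engagement-only ranking, while weighting by accuracy moves the independent test to the top. In practice the hard part is obtaining a trustworthy accuracy signal, which this sketch simply assumes.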

One of the significant challenges with Naver’s current system is the prevalence of user-generated content, particularly from blogs and cafes, that is heavily influenced by advertisements and sponsorships. Many bloggers and content creators receive compensation for promoting products and services, which can skew their reviews and recommendations. This commercial bias is not always transparent to users, leading to a dilution of trust in the information provided.

Moreover, the integration of advertisements directly into search results further complicates the user experience. Sponsored content often appears alongside or even above organic results, making it difficult for users to distinguish between genuinely useful information and paid promotions. This issue is exacerbated by the design of Naver’s search results pages, which mix a variety of content types, such as videos, images, and user reviews, and so make it harder to identify the most reliable sources of information.

The combined effect of these factors is a decline in the overall quality of information available on Naver. Users find it increasingly difficult to trust the search results they receive, as the line between unbiased information and commercial content becomes blurred. This erosion of trust is particularly concerning in fields where accurate information is crucial, such as healthcare, finance, and technology.

Furthermore, the focus on engagement metrics and user preferences can create echo chambers, where users are repeatedly exposed to similar types of content that reinforce their existing views and preferences. This can limit the diversity of information and perspectives available to users, reducing their ability to make well-informed decisions.

While Naver’s algorithmic advancements aim to enhance user experience by prioritizing relevant and popular content, they have also contributed to a landscape where reliable and unbiased information is harder to find. The prevalence of commercial influences and the blending of organic and sponsored content further complicate users’ ability to discern trustworthy information, undermining the overall quality and reliability of the platform’s search results.

The Rise of AI-Generated Content

Artificial intelligence has revolutionized various industries, and content creation is no exception. AI algorithms can generate articles, reviews, and even social media posts with remarkable efficiency. Platforms like Naver have increasingly integrated AI to handle the vast amounts of data and content generated daily. While this technology can streamline content creation, it also raises significant concerns about the quality and reliability of the information produced.

AI-generated content often lacks the nuanced understanding and critical analysis that human authors bring. This can lead to the dissemination of misleading or incorrect information. Moreover, AI systems can inadvertently perpetuate biases present in their training data, leading to content that may reinforce stereotypes or present a skewed perspective. For example, AI-generated reviews might favor products or services that align with prevailing market trends, rather than providing an objective assessment.

TikTok, a platform renowned for its short, engaging videos, exemplifies the potential pitfalls of AI-generated and algorithm-driven content. Many TikTok videos, including those recommending restaurants or products, are produced with AI tools or surfaced by recommendation algorithms that prioritize engagement metrics. This has led to a proliferation of sponsored content that is often indistinguishable from genuine user-generated recommendations.

The platform’s emphasis on virality means that content creators are incentivized to produce videos that attract the most views and likes, often at the expense of accuracy and authenticity. As a result, users are frequently exposed to content that is more entertaining than informative, further blurring the lines between genuine recommendations and advertisements. This trend is particularly concerning given TikTok’s popularity among younger audiences, who may be less discerning about the sources of their information.

The rise of AI-generated content poses significant risks for the quality of information available online. AI systems can generate vast amounts of content quickly, but this quantity often comes at the expense of quality. Without rigorous fact-checking and editorial oversight, AI-generated content can easily spread misinformation. This is especially problematic in areas such as health, finance, and politics, where accurate information is crucial.

Furthermore, as AI systems continue to learn from existing data, they risk perpetuating and amplifying existing biases and inaccuracies. For instance, if an AI system is trained on data that includes a high volume of sponsored content, it may prioritize similar content in the future, further diluting the overall quality of information. This creates a feedback loop where low-quality or biased information becomes more prevalent, making it increasingly difficult for users to find reliable and objective content.

The Consequences of Misinformation

The infiltration of misinformation into popular search engines and content platforms significantly undermines consumer trust. When users encounter misleading or biased information, especially from sources they have historically relied upon, their confidence in the platform’s reliability is eroded. This trust deficit is particularly pronounced when users feel that the information has been manipulated for commercial gain, as seen with the prevalence of sponsored content on Naver and TikTok.

For instance, when users seeking unbiased product reviews or health information encounter predominantly sponsored posts, they begin to question the authenticity of all content on the platform. This skepticism can extend beyond individual platforms, fostering a general mistrust of online information. The erosion of trust has tangible repercussions: consumers may become less likely to engage with online content, reducing the effectiveness of digital platforms as information resources.

The consequences of misinformation are not confined to the digital realm; they can have serious real-world implications. For example, inaccurate health information can lead to harmful practices and reluctance to follow medical advice. During the COVID-19 pandemic, misinformation spread through various online platforms contributed to vaccine hesitancy and the proliferation of ineffective or dangerous treatments. This misinformation often originated from seemingly credible sources, further complicating the public’s ability to discern reliable information.

In the financial sector, misleading information about investments or economic conditions can result in poor financial decisions, leading to significant economic losses for individuals. Similarly, political misinformation can influence election outcomes, erode democratic processes, and fuel social discord. The 2016 U.S. presidential election and the Brexit referendum are frequently cited examples in which misinformation is believed to have shaped public opinion and voting behavior.

One of the most insidious aspects of misinformation is its potential to create a feedback loop with AI learning systems. AI algorithms rely on vast amounts of data to learn and improve. When this data is tainted with misinformation, the AI systems can inadvertently reinforce and propagate these inaccuracies. This feedback loop is particularly problematic in search engines and social media platforms, where AI-driven content recommendations play a crucial role in shaping user experiences.

As AI systems increasingly prioritize content that generates high engagement, which is often sensationalist or biased material, they perpetuate the spread of misinformation. This not only degrades the quality of information available but also skews the data on which the systems are later trained. Over time, this can result in a compounding effect, where the prevalence of misinformation grows, further contaminating the data pool from which AI systems learn.
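
The compounding effect described above can be made concrete with a very small simulation. This is a simplified sketch under stated assumptions, not a model of any real recommender: the function name `simulate_feedback_loop`, the `boost` parameter, and the sample numbers are hypothetical. It assumes that in each round low-quality items receive `boost` times the exposure of reliable items, and that the next round’s data pool mirrors that exposure.

```python
def simulate_feedback_loop(initial_share, boost, rounds):
    """Return the low-quality share of the data pool at the start and after each round.

    initial_share: starting fraction of low-quality items, between 0 and 1
    boost: exposure a low-quality item gets relative to a reliable one
           (values above 1 mean low-quality items are favored)
    rounds: number of retraining rounds to simulate
    """
    share = initial_share
    shares = [share]
    for _ in range(rounds):
        low_exposure = share * boost       # exposure given to low-quality items
        reliable_exposure = 1.0 - share    # exposure given to reliable items
        # The next pool is assumed to reflect the exposure of the previous round.
        share = low_exposure / (low_exposure + reliable_exposure)
        shares.append(share)
    return shares


if __name__ == "__main__":
    for round_number, share in enumerate(simulate_feedback_loop(0.2, 1.5, 5)):
        print(f"round {round_number}: low-quality share = {share:.2f}")
```

Under these assumptions, a pool that starts with 20 percent low-quality items and a modest 1.5x engagement advantage is majority low-quality within five rounds, while a `boost` of 1.0 leaves the share unchanged. The point is the direction of the trend, not the specific numbers.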

The long-term implications of widespread misinformation are profound. As AI systems and algorithms continue to evolve, their reliance on accurate data becomes even more critical. If the foundational data is flawed, the outputs will be equally compromised, affecting everything from search engine results to automated decision-making processes in various industries.

In addition to degrading the quality of information, the feedback loop of misinformation can exacerbate societal divisions. By amplifying biased or extreme viewpoints, AI systems can contribute to the polarization of public discourse, making it more challenging to reach consensus on critical issues. This polarization can hinder effective policymaking and erode the social fabric, making it difficult to address collective challenges such as climate change, public health, and economic inequality.

Evaluating Naver’s Reliability and the Role of AI in Information Quality

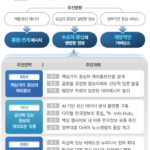

The quality and reliability of online information are under pressure from several directions at once. Naver, South Korea’s leading search engine, exemplifies many of the challenges faced by modern digital platforms. Despite advanced algorithms like Deep Intent Analysis (DIA) and Creator Rank (C-Rank), the prevalence of advertisement-driven content and biased reviews has undermined user trust in the platform. Furthermore, the rise of AI-generated content, while offering efficiency, has introduced new avenues for misinformation, complicating the landscape of digital information.

The consequences of these developments are far-reaching. Misinformation can erode consumer trust, lead to real-world negative outcomes, and create feedback loops that further degrade the quality of information available online. Addressing these issues requires a concerted effort from both users and digital platforms. Users must develop critical skills for evaluating the reliability of sources, while platforms need to enhance the transparency of their algorithms and encourage ethical content creation.

Practical steps for users include cross-referencing information, utilizing fact-checking tools, and educating themselves on digital literacy. For platforms, disclosing ranking criteria, conducting regular algorithm audits, and clearly labeling sponsored content are crucial measures to improve the trustworthiness of their content.
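
As one illustration of cross-referencing, the sketch below checks whether a claim is backed by pages from more than one host. It is a rough heuristic under stated assumptions: the function names, the `min_sources` threshold, and the example URLs are hypothetical, and distinct host names are only a weak proxy for genuinely independent sources.

```python
from urllib.parse import urlparse


def independent_domains(urls):
    """Return the set of distinct host names found in the given URLs."""
    domains = set()
    for url in urls:
        host = urlparse(url).netloc.lower()
        if host:  # skip strings that do not parse to a host
            domains.add(host)
    return domains


def is_corroborated(urls, min_sources=2):
    """Treat a claim as corroborated when enough distinct hosts report it."""
    return len(independent_domains(urls)) >= min_sources


if __name__ == "__main__":
    # Placeholder URLs for illustration only.
    sources = [
        "https://blog.example.com/review-a",
        "https://blog.example.com/review-b",
        "https://news.example.org/report",
    ]
    print(independent_domains(sources))
    print(is_corroborated(sources))
```

Two posts on the same blog count as a single source here, which reflects the idea that repetition on one site is not confirmation. A fuller check would also need to account for syndicated or sponsored copies of the same text appearing on different sites.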

As we navigate this evolving digital landscape, it is imperative to prioritize the accuracy and integrity of the information we consume and share. By adopting these strategies, we can foster a more reliable and trustworthy online environment, ensuring that digital platforms continue to serve as valuable resources for knowledge and information.